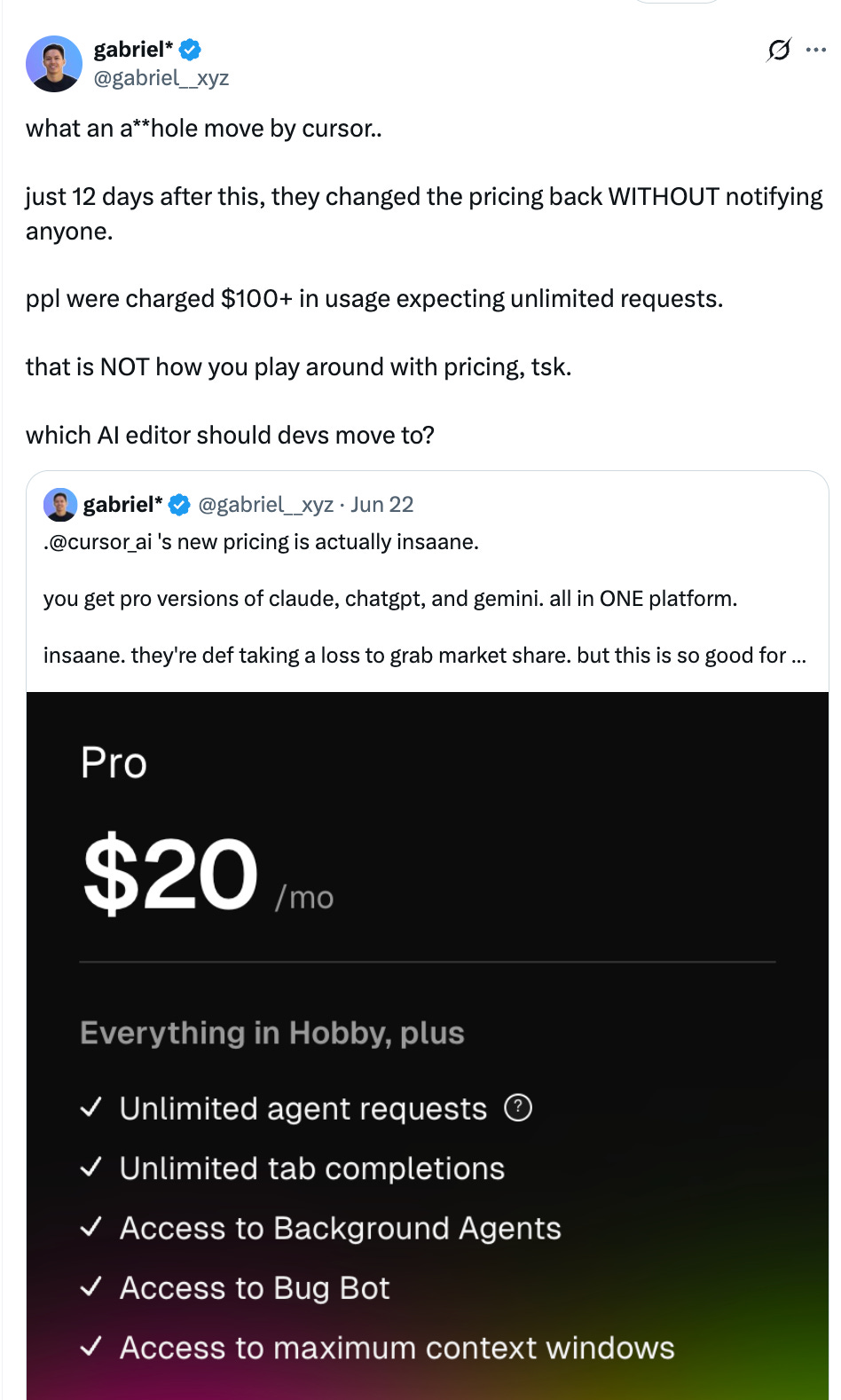

"Why did you charge me $100 without telling me?"

How can companies in the LLM space survive and communicate effectively during the next pricing reset?

The backlash around Cursor’s pricing wasn’t just a one-off mistake. To me, it feels like a sign of something deeper, a challenge that a lot of companies in the LLM space are quietly dealing with. Getting pricing right is honestly just as tough as building a great product, maybe even tougher. You can make something super impressive, but if the price doesn’t match how users actually experience value, the whole thing can feel shaky. At the same time, I think we’re seeing a bigger shift in this space - the early days of generative AI were all about being generous: free tokens, high usage limits, and growth that looked amazing but was really just burning cash. That’s changing now - the economic reality is starting to hit, and it reminds me a bit of how cloud infrastructure pricing evolved: more metered usage, more cost visibility, and a lot more pressure to optimize. I’m sure flat pricing and classic SaaS-tier-style plans aren’t disappearing overnight, but the trend is clearly toward pricing models that reflect real usage and are harder to game.

Right now, most vendors seem to be settling on two main price points. There’s a hobbyist/prosumer tier in the ~$10-$25 per month band, and then a “max”/power user tier that’s ~$100-$200. I don’t think of these as old-school SaaS plans, but more like prepaid usage brackets, and I think they reflect the underlying costs that providers are juggling. What’s interesting is how quickly the lower tier is coming under pressure. Open-source and local models are improving fast, and honestly, it’s getting harder to justify paying for something when the free alternatives are good enough. The primary reason the hobbyist band is still doing so well is that the “consumer” layer on the free alternatives isn’t very solid today, but I wouldn’t be surprised if the hobbyist tier keeps getting squeezed, very quickly getting to ~$0 for most use cases. That puts more weight on the higher tier, where people are still willing to pay, but only if it’s really worth it. And at the core, the overlap between tools becomes a real issue - if I’m already paying for Claude Pro/Max, it’s tough to justify another subscription just to try something similar for an adjacent use case. And it’s not just about the money: switching is a hassle, especially when your data and context are stuck in one tool. But I think that’ll also change pretty soon as “context” becomes more portable.

Personally, I keep experimenting a lot - I’ve probably switched between 3-4 foundational stacks in the last couple of months. But companies are different - once a tool is embedded in a team’s workflow, with approvals, compliance, and integration into internal systems, it’s not so easy to pull it out. That’s where I think most retention will happen: not just by delighting solo users, but by helping teams actually get work done with less friction.

So, as companies focus on building for that higher tier, the real question is, how do you stay sticky when the hobbyist end falls away? And what does it take to build a moat when free tools are getting better every week, and paid tools face more scrutiny than ever?

I keep coming back to the same ideas (and I know it sounds pretty obvious the way I frame it), but IMO:

The tools that last will be the ones that move from being interesting to being embedded. It’s not enough to just be a good chatbot or conversation layer, or to be able to drive clean UIs, repurpose assets, etc. The winning products will tie directly into the user’s workflow, and they won’t just answer questions, but actually take action. If a model can file a Jira ticket, update Salesforce, or spin up Terraform, it’s not just responding, it’s actually operating. Once you have that, the cost of switching isn’t just financial, it’s operational. Integration is a big piece of this: deep, messy, real-world integration. If your tool can read and write in GitHub, Snowflake, Notion, and Slack, not just through surface-level APIs, but in ways that actually fit how those systems are used (and how people use them), you start to build something that’s hard to replace. People underestimate how sticky complexity can be - once a product touches enough parts of the stack, pulling it out becomes a project no one wants to take on just to save a bit of money.

There’s also a growing need for flexibility with the models themselves. More teams want to bring their own weights, or fine-tune on private data without sending it to an external provider. Not everyone wants to run their own stack, and most won’t, but the desire to control costs and protect sensitive data is real. Vendors that make this easy, without needing deep ML expertise, will earn a lot of trust. On the financial side, folks from finance are asking tougher questions now, so flat caps and unlimited plans don’t work when spend starts to scale. Transparent pricing, with clear breakdowns per run or per token, becomes a real differentiator. Not because it’s exciting, but mainly because someone in procurement needs to make the numbers work. Being able to forecast spend, set alerts, and tie usage back to outcomes makes those conversations a lot easier.
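To make the "clear breakdowns per run or per token" idea a bit more concrete, here is a minimal Python sketch of what that could look like. Everything in it is an assumption for illustration: the per-token prices, the `Run` record, the team names, and the naive linear forecast are all made up and don't reflect any vendor's actual rates or API.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per million tokens (not any vendor's real rates).
PRICE_PER_MTOK = {"input": 3.00, "output": 15.00}


@dataclass
class Run:
    """One model call, attributed to a team (hypothetical record shape)."""
    team: str
    input_tokens: int
    output_tokens: int


def run_cost(run: Run) -> float:
    """Cost of a single run in USD, split by input and output token price."""
    total = (run.input_tokens * PRICE_PER_MTOK["input"]
             + run.output_tokens * PRICE_PER_MTOK["output"])
    return total / 1_000_000


def spend_by_team(runs: list[Run]) -> dict[str, float]:
    """Sum per-run costs into a per-team breakdown."""
    totals: dict[str, float] = {}
    for run in runs:
        totals[run.team] = totals.get(run.team, 0.0) + run_cost(run)
    return totals


def forecast_month(spend_so_far: float, day_of_month: int,
                   days_in_month: int = 30) -> float:
    """Naive linear forecast: assume the daily burn rate so far continues."""
    return spend_so_far / day_of_month * days_in_month


def teams_over_budget(totals: dict[str, float], budgets: dict[str, float],
                      day_of_month: int, days_in_month: int = 30) -> list[str]:
    """Teams whose forecast month-end spend exceeds their budget."""
    return [
        team for team, spent in totals.items()
        if forecast_month(spent, day_of_month, days_in_month)
        > budgets.get(team, float("inf"))  # no budget set means no alert
    ]


if __name__ == "__main__":
    runs = [
        Run("platform", 2_000_000, 500_000),
        Run("support", 1_000_000, 100_000),
        Run("platform", 1_000_000, 0),
    ]
    totals = spend_by_team(runs)
    print(totals)
    print(teams_over_budget(totals, {"platform": 40.0, "support": 20.0},
                            day_of_month=10))
```

The point isn't the arithmetic, which is trivial, but that once spend is attributed per run and per team, forecasting and alerting fall out almost for free, and that's the conversation procurement actually wants to have.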

Personalization is another lever, but I don’t mean it in the generic marketing sense - basically, if a tool learns from its users quickly, improves every day, and starts to mirror the language and patterns of an organization, it creates a sense of ownership. That feedback loop becomes a kind of organizational memory, and at that point you’re not just using a tool, you’re shaping it, and that kind of embeddedness (fun fact: Substack doesn’t recognize it as a word) doesn’t copy-paste easily to a competitor. All of this really assumes a team-centric lens, i.e. role-based workflows, approvals, audit logs, and version control. These aren’t features that attract individual hackers, but they’re essential for teams doing real work. They’re often what separates a hobby project from something a business truly depends on.

And finally, needless to say, distribution matters more than we admit. The smartest model in the world loses out to the tool that’s right there where the work happens. If you’re one click away in VS Code, or a slash command in Slack, you’re part of the flow, and once you’re in the flow, you’re in the decision loop. There’s also a governance layer that becomes more important as tools scale inside companies. Pre-built guardrails, safe defaults, red-teaming, and usage analytics don’t make headlines, but they make executive buy-in possible. These are the features that get you through security reviews, not the ones that get you trending on Twitter.

So, I don’t think the moat will come from having the biggest model or the lowest latency. It’ll come from being useful, trusted, and deeply woven into how a team works. That’s harder to build, but it’s also much harder to rip out. We’re exiting the playground phase of LLMs, and the infrastructure bill is here. The tools that survive will be the ones that can justify their cost, not just with benchmarks, but with real outcomes.