Product ≠ Software

Why solopreneurs can thrive building profitable products through vibe coding while enterprise systems struggle.

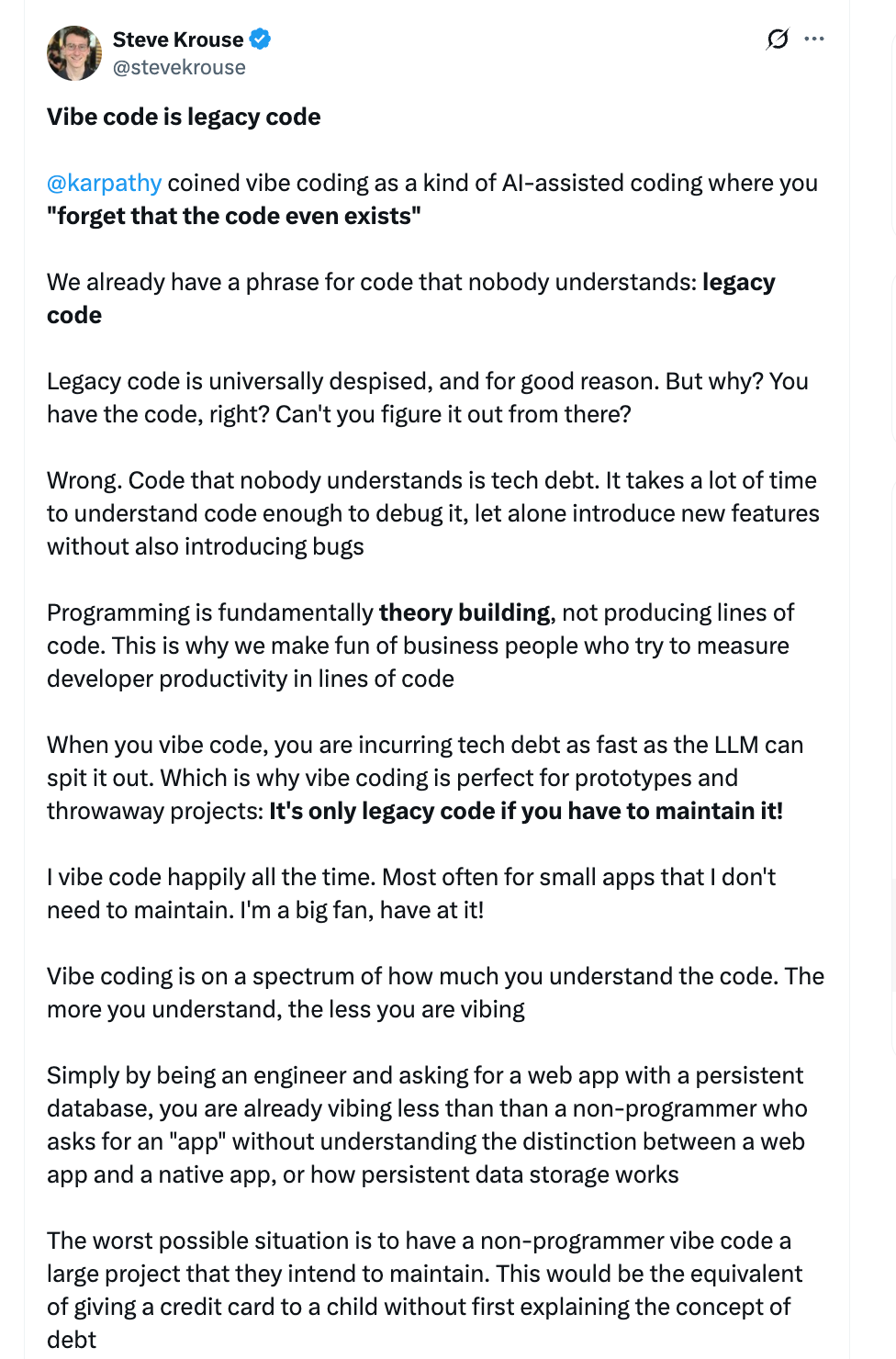

I came across a tweet from Steve Krouse recently that captured something important about the current state of AI-assisted programming. We're in the middle of a vibe coding explosion, where AI tools excel at producing locally coherent code snippets. But many are misreading this progress, extrapolating it into grand claims about the end of human programmers.

There's a hidden trap in this apparent productivity boom: the cost to write code has collapsed, but the cost to review code has exploded. In the past, if someone submitted a 200-line diff, you knew roughly how they got there. Now, people paste in a vague prompt, and out comes a 600-line orchestration module with imported packages no one asked for and assumptions no one agreed to. Reviewing that output is like spelunking in someone else's hallucinated architecture. Every review turns into vibe debugging: you're not fixing a bug, you're fixing a mood.

This mismatch between generation speed and review complexity leads to two dangerous outcomes. First, systemic fragility: codebases fill with low-context patches that work locally but struggle to compose globally. The AI makes choices no one remembers making. Second, architectural debt by accretion: teams conflate "code that runs" with "code that scales." Vibe-coded systems optimize for the constraints they were shown, which often yields momentary shippability rather than maintainability. Try prompting Claude to generate a Seaborn plot for churn insights: you'll get 500 lines of syntactically perfect code, followed by 100 minutes of debugging just to make it compose properly and make business sense.

The fantasy of spec-to-code automation imagines programmers as mere translators who mechanically convert requirements into implementation. But this strawman programmer doesn't exist in practice. Every competent engineer I've encountered actively participates in problem definition, challenges assumptions, and iteratively refines solutions through the act of building them. Simon Willison has a brilliant articulation of this point (the entire thread is gold).

Local optimization vs global coherence

I really liked the concept of local optimization vs. global coherence when I first came across it (here). Extrapolating it to what we're witnessing now, vibe coding excels at local optimization: solving bounded problems with minimal dependencies, where correctness is contextually obvious and the blast radius remains small. Consider writing a webhook handler, formatting API output, writing SQL queries, or building a frontend that ingests a handful of user inputs, makes a few OpenAI calls, and recommends the best cafes and restaurants. The problem space is well-defined, success criteria are explicit, and validation is immediate.
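To make "bounded" concrete, here is a minimal sketch of the kind of webhook handler that sits squarely in vibe-coding territory. It assumes a hypothetical provider that signs the raw request body with HMAC-SHA256 using a shared secret and sends the hex digest alongside it; the function name, the secret, and the payload shape are all illustrative, not any real provider's API. The whole problem fits in one function, and a couple of checks tell you immediately whether it works.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; a real provider would issue this out of band.
WEBHOOK_SECRET = b"example-secret"


def handle_webhook(body: bytes, signature_hex: str) -> tuple[int, dict]:
    """Verify a (hypothetical) HMAC-SHA256 signature, then parse the JSON payload.

    Returns an (HTTP status, response body) pair.
    """
    expected = hmac.new(WEBHOOK_SECRET, body, hashlib.sha256).hexdigest()
    # compare_digest performs a constant-time comparison; comparing bytes
    # avoids a TypeError if the caller sends non-ASCII characters.
    if not hmac.compare_digest(expected.encode(), signature_hex.encode()):
        return 401, {"error": "invalid signature"}
    try:
        event = json.loads(body)
    except json.JSONDecodeError:
        return 400, {"error": "malformed JSON"}
    if not isinstance(event, dict):
        return 400, {"error": "expected a JSON object"}
    return 200, {"received": event.get("type", "unknown")}


if __name__ == "__main__":
    payload = json.dumps({"type": "invoice.paid"}).encode()
    good_sig = hmac.new(WEBHOOK_SECRET, payload, hashlib.sha256).hexdigest()
    print(handle_webhook(payload, good_sig))  # (200, {'received': 'invoice.paid'})
    print(handle_webhook(payload, "0" * 64))  # (401, {'error': 'invalid signature'})
```

Every constraint here is local: one input, one secret, one function, and success can be checked in isolation. That is exactly the shape of problem where generated code tends to be right on the first or second try.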

Complex engineering represents a fundamentally different class of problem: achieving global coherence across multiple interacting components under shared constraints like scalability, security, and failure recovery. This isn't merely a difference of scale; it's a categorical distinction.

The core issue is the context explosion problem. While local optimization requires understanding a bounded problem space, global coherence demands reasoning about exponentially expanding interaction surfaces. Consider building a compiler where each decision creates cascading constraints: the lexer's token design affects parser complexity, which influences AST structure, which determines optimization possibilities. A locally optimal choice at any level might make global optimization impossible.

Vibe coding struggles here because it operates by pattern matching against previously seen solutions. But truly complex systems often require novel constraint satisfaction: finding solutions that balance competing demands in ways that have no direct precedent.

Engineering at scale is often constraint-oriented: you're not only teaching the system how to do something, you're also defining what must be true (latency < 300ms, idempotent retries, zero-downtime deploys). These constraints interact across components and force tradeoffs that can't easily be vibe-coded. They need negotiation, abstraction, and architecture, not just generation. Good engineers handle this through mechanical sympathy: an intuitive alignment between the software they write and the underlying systems it runs on. Vibe coding has no such sympathy. It can write valid Kafka consumers that don't understand backpressure, or spawn microservices without accounting for network costs. It treats code as text, not as behavior in a live system.
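As a small illustration of "defining what must be true", here is a sketch of two of those constraints in miniature: idempotent handling of retried messages, and backpressure via a bounded buffer. It uses Python's standard-library `queue.Queue` and a single worker thread as a stand-in for a real broker such as Kafka; the `Message` type, its `id` field as the idempotency key, and the balance-update logic are hypothetical, chosen only to make the invariants visible.

```python
import queue
import threading
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    id: str       # hypothetical unique ID, reused on retry (the idempotency key)
    account: str
    amount: int


class IdempotentConsumer:
    """Applies each message at most once, even if it is delivered more than once."""

    def __init__(self) -> None:
        self.seen: set[str] = set()  # in-memory only: lost on restart
        self.balances: dict[str, int] = {}
        self.duplicates = 0

    def handle(self, msg: Message) -> bool:
        if msg.id in self.seen:
            self.duplicates += 1
            return False  # a redelivery or retry: skip the side effect
        self.balances[msg.account] = self.balances.get(msg.account, 0) + msg.amount
        self.seen.add(msg.id)
        return True


def run_pipeline(messages: list[Message], capacity: int = 2) -> IdempotentConsumer:
    if capacity < 1:
        raise ValueError("capacity must be >= 1 (maxsize=0 means unbounded)")
    # Bounded queue: put() blocks while it is full, so a fast producer is
    # slowed to the consumer's pace instead of buffering without limit.
    q: queue.Queue = queue.Queue(maxsize=capacity)
    consumer = IdempotentConsumer()
    sentinel = None  # signals the worker to stop

    def consume() -> None:
        while True:
            msg = q.get()
            if msg is sentinel:
                break
            consumer.handle(msg)

    worker = threading.Thread(target=consume)
    worker.start()
    for msg in messages:
        q.put(msg)  # blocks here when the consumer falls behind (backpressure)
    q.put(sentinel)
    worker.join()
    return consumer


if __name__ == "__main__":
    retried = [
        Message("m1", "alice", 50),
        Message("m2", "bob", 20),
        Message("m1", "alice", 50),  # same message delivered again after a retry
        Message("m3", "alice", -10),
    ]
    consumer = run_pipeline(retried)
    print(consumer.balances)    # {'alice': 40, 'bob': 20}
    print(consumer.duplicates)  # 1
```

Neither property lives in any single line. Idempotency only holds if every producer retries with the same `id` and the dedupe record is persisted atomically with the side effect (this in-memory `seen` set would not survive a restart), and backpressure only helps if upstream components tolerate being slowed down. That cross-component agreement is the part a locally plausible snippet doesn't carry.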

This explains why solopreneurs can thrive building profitable products through vibe coding while enterprise systems struggle. The distinction is fundamental: products optimize for user value and market success; software systems optimize for reliability, maintainability, and evolution. The solopreneur model works through problem curation: selecting challenges solvable via local optimization while outsourcing global coherence problems (or, in many cases, ignoring them entirely and shipping demos that work on a local machine and look good on an AI-generated landing page). Crucially, solopreneurs can absorb the exploded review costs because they're reviewing their own AI output with personal context, and that context doesn't transfer to teams, where code review becomes reverse-engineering someone else's AI conversation.

Beyond code generation

The misconception stems from conflating code generation with software engineering. Code is the artifact, not the activity. Real software engineering emerges from the dialectical relationship between problem and solution domains, where implementing solutions reveals new aspects of the problem.

I remain deeply optimistic about AI's potential to accelerate software delivery and help more people ship products quickly. But the timeline for truly autonomous software engineering is slightly longer than current hype suggests. The reasons are fundamental: mechanical sympathy can't be pattern-matched, constraint satisfaction requires domain expertise that emerges from years of operational experience, and architectural judgment develops through repeated exposure to system failures and recovery.

Obviously, we will train models to develop mechanical sympathy in due time, but even then some aspects of software engineering will never disappear: the need to understand how code behaves in live systems, the ability to reason about failure modes that haven't been documented, and the creativity required to find novel solutions to unprecedented constraint combinations. I don't think these are implementation details that better models will eventually subsume. They're the irreducible core of what makes software engineering an enduring human discipline.