How should your team work with AI?

The foundation for agentic workflows, collaborative systems, and shared intelligence for teams.

The internet is flooded with articles about prompt engineering and, more recently, context engineering (which should have been the default since day 1). But they all focus on the same things: either (a) helping YOU get better outputs, optimize your personal AI workflow, and be more productive, or (b) building complex full-stack LLM infra for enterprises.

Almost nothing addresses the intermediate, messier, and more interesting challenge: how can teams actually work with AI together?

It goes without saying that AI is a huge leveler: it speeds things up, eliminates grunt work, and gives everyone superpowers. But there's wild variance in how people within the same organization, or even the same team, use it. While some folks ask brilliant, strategic questions, others treat it like Google. The query and context patterns are all over the place. And if you're a manager trying to upskill your workforce or give your team an edge, you quickly realize the real question isn't "how do I use AI better?" It's "how should my team work with AI?"

This braindump is my attempt at filling that gap. The principles we'll explore are the building blocks for everything from team collaboration to the sophisticated AI agents and complex LLM infrastructure that organizations are building.

We'll go through these progressively. None works ideally in isolation, but together they create the foundation for systematic AI capabilities. We'll keep "context engineering" at the core, since prompt engineering without proper context is pretty useless for real work. I always use this metaphor: a poorly crafted context is like sending the world's best translator into a room with muffled audio. The outcome will only be as clear as what it hears.

So, here's what I've learned about making this work - some of this may sound obvious, but bear with me.

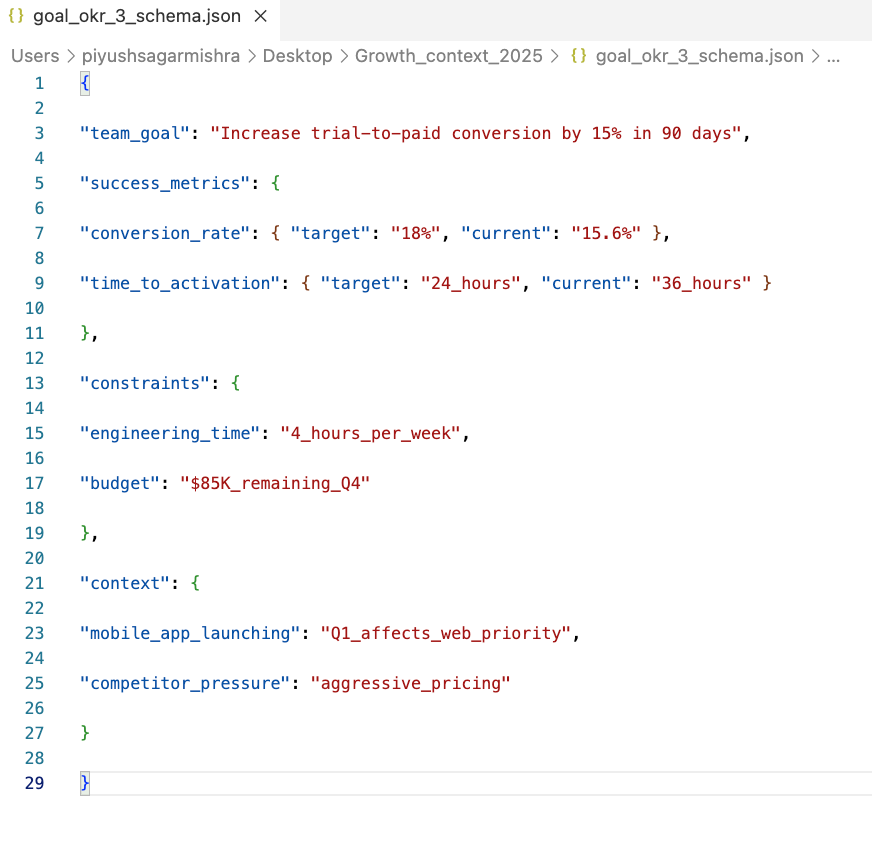

Principle 1: Define clear team goals with measurable success criteria

Teams need shared definitions of what success looks like, but most teams have never actually written down their objectives in a way that's both human-readable and machine-actionable. The gap between a vague goal like "improve conversion rates" and a structured goal that captures constraints, context, and success metrics is massive.

The magic happens when teams stop assuming everyone understands what "good performance" means and start having explicit conversations about it. Your growth lead might think success means hitting conversion targets, while engineering thinks it means not breaking anything, and product thinks it means user experience improvements. These aren't wrong perspectives - they just need to be reconciled into shared definitions.

Start by having each team member write down what they think the goal is, what constraints they're operating under, and what context affects success. Then spend time aligning on these definitions until everyone agrees on what you're optimizing for. Most teams discover they've been working toward slightly different objectives without realizing it. When engineering mentions they only have 4 hours per week for optimization work, and product shares that the mobile app launch affects web conversion priorities, suddenly your AI recommendations can factor in these real constraints instead of suggesting impossible solutions.

The key insight is making this centralized and accessible to everyone. When anyone on the team prompts AI for analysis or recommendations, they're pulling from the same shared understanding of what success looks like and what constraints exist.
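To make "human-readable and machine-actionable" concrete, here is a minimal Python sketch of one shared goal stored as structured data and rendered into every prompt. The `TeamGoal` class, its field names, and the example values (taken from the engineering and product constraints described above) are hypothetical illustrations, not a prescribed schema.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class TeamGoal:
    """One shared goal the whole team has agreed on (hypothetical schema)."""
    objective: str
    success_metrics: list[str]
    constraints: list[str] = field(default_factory=list)
    context: list[str] = field(default_factory=list)

    def to_prompt_block(self) -> str:
        # Serialize to JSON so every prompt embeds the exact same definition.
        return "SHARED TEAM GOAL:\n" + json.dumps(asdict(self), indent=2)


# Illustrative values based on the constraints discussed above.
goal = TeamGoal(
    objective="Improve web conversion rate",
    success_metrics=["Conversion rate on the web signup flow"],
    constraints=["Engineering has 4 hours per week for optimization work"],
    context=["The mobile app launch affects web conversion priorities"],
)

prompt = goal.to_prompt_block() + "\n\nQuestion: What should we test first?"
print(prompt)
```

Keeping the goal in one place and rendering it the same way every time is what lets different people's prompts start from the same definition of success.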

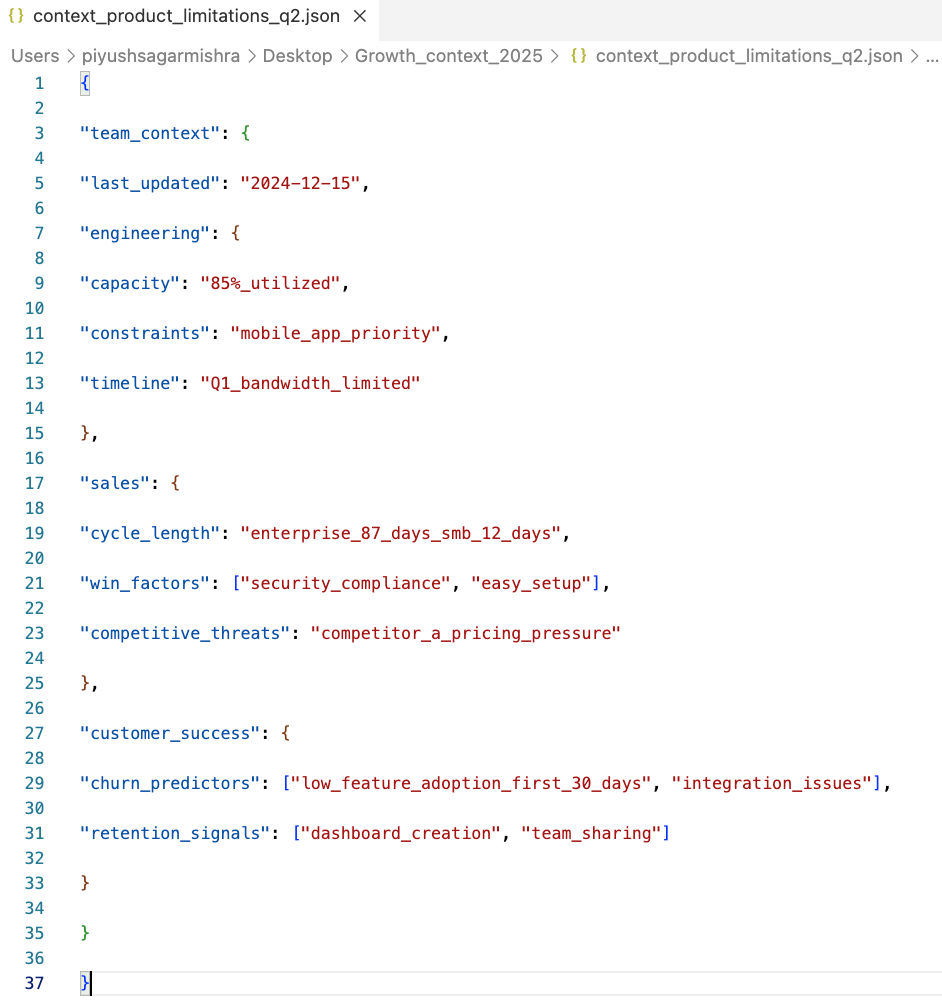

Principle 2: Engineer context like infrastructure

Think of LLMs as exceptionally knowledgeable consultants who know nothing about your specific business unless you explicitly brief them. They weren't in last quarter's strategy meeting, don't know your CEO's pricing philosophy, and have no understanding of what "activation" means in your product context.

Most teams treat context as optional background information rather than essential infrastructure. They feed AI raw data and expect meaningful insights, forgetting that data without narrative is just noise. This leads to what context engineering experts call "context failure modes", e.g. context poisoning, distraction, confusion, and clash. LLMs are like sous-chefs who don't know what's in your fridge: you must inventory your ingredients, write the precise recipe, and keep the knives within arm's reach.
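Of these failure modes, clash (two pieces of context that contradict each other, such as two teams defining "activation" differently) is the easiest to catch mechanically before anything reaches the model. The sketch below assumes each fact in the shared context is tagged with a key and a source; `ContextFact`, `find_clashes`, and the example values are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextFact:
    key: str     # what the fact is about, e.g. "activation_definition"
    value: str   # the claimed value
    source: str  # which team or system supplied it


def find_clashes(facts: list[ContextFact]) -> dict[str, set[str]]:
    """Return every key that has more than one distinct value, with those values."""
    values_by_key: dict[str, set[str]] = defaultdict(set)
    for fact in facts:
        values_by_key[fact.key].add(fact.value)
    return {key: values for key, values in values_by_key.items() if len(values) > 1}


# Hypothetical facts: product and finance disagree on what "activation" means.
facts = [
    ContextFact("activation_definition", "completed onboarding", "product"),
    ContextFact("activation_definition", "first payment", "finance"),
    ContextFact("engineering_capacity", "85%", "sprint planning tool"),
]
clashes = find_clashes(facts)
print(clashes)
```

A clash found this way is a prompt for a human conversation about which definition is right, not something to resolve automatically.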

AI needs structured access to this organizational knowledge to generate useful insights. Think of this as building a centralized context repository that everyone contributes to and benefits from.

The beautiful thing about building shared context is that you're probably already tracking most of this data - it's just scattered across different systems and people. Your CRM has sales cycle data, your customer success platform has churn indicators, your project management tools have engineering capacity information. The challenge isn't generating new data; it's extracting insights from what you already have and making it accessible.

If you don't have a strong analytics team, or lack the engineering support to automate these data-to-context pipelines, start by having each functional area pull its key metrics and insights from existing systems. Sales can share average cycle lengths from Salesforce, customer success can identify churn patterns from support tickets and usage data, and engineering can assess capacity from sprint planning tools. The goal isn't perfection; it's creating a shared repository of organizational intelligence that everyone contributes to and uses.

This becomes incredibly powerful when it's centralized. Instead of each team member having their own understanding of "what's happening in the business," everyone works from the same context base. When your marketing manager asks AI to analyze campaign performance, it already knows that engineering is at 85% capacity and competitor A is applying price pressure, so its recommendations fit organizational reality instead of being theoretically optimal but practically impossible.
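A shared context base can start as something this simple. The sketch below assumes a hypothetical `ContextRepository` that each function appends plain-language facts to, and that prepends the same rendered context to every request; the entries and sources are illustrative placeholders, not real data.

```python
from dataclasses import dataclass


@dataclass
class ContextEntry:
    area: str    # contributing function, e.g. "engineering"
    fact: str    # the insight, stated in plain language
    source: str  # the system or artifact it was pulled from


class ContextRepository:
    """A single shared store that every team member's prompts draw from."""

    def __init__(self) -> None:
        self._entries: list[ContextEntry] = []

    def add(self, entry: ContextEntry) -> None:
        self._entries.append(entry)

    def render(self) -> str:
        # Consistent format: one line per fact, sorted by contributing area.
        lines = [
            f"- [{e.area}] {e.fact} (source: {e.source})"
            for e in sorted(self._entries, key=lambda e: e.area)
        ]
        return "ORGANIZATIONAL CONTEXT:\n" + "\n".join(lines)

    def build_prompt(self, request: str) -> str:
        return f"{self.render()}\n\nREQUEST:\n{request}"


repo = ContextRepository()
repo.add(ContextEntry("engineering", "Team is at 85% capacity", "sprint planning tool"))
repo.add(ContextEntry("competitive", "Competitor A is applying price pressure", "CRM notes"))

print(repo.build_prompt("Analyze last month's campaign performance and recommend next steps."))
```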

The context structure doesn't need to be complex - just consistent. Teams that invest in building this shared intelligence find that their AI interactions become dramatically more useful because every recommendation accounts for real business constraints and opportunities.

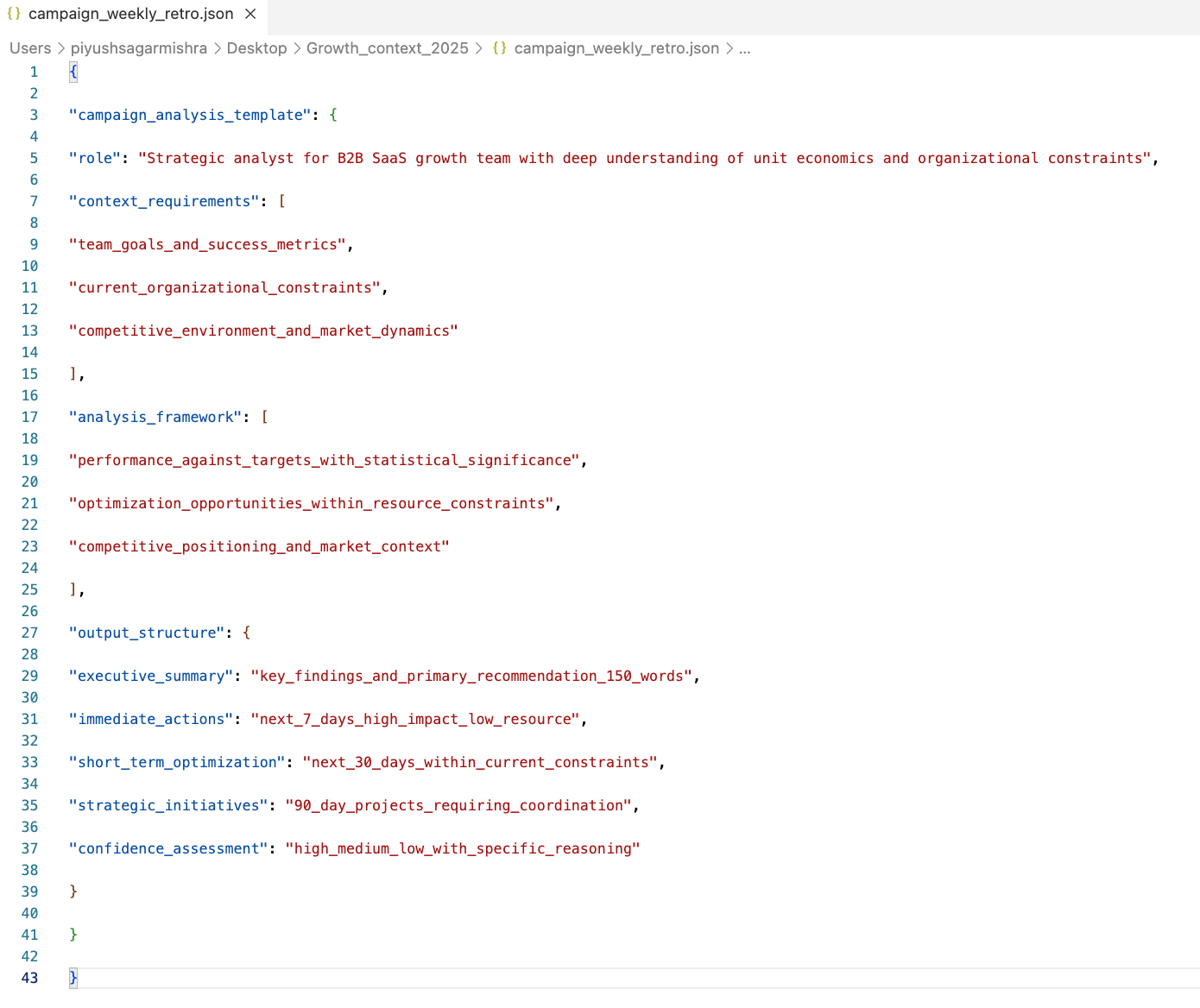

Principle 3: Build as systems, not one-off solutions: create reusable prompt templates your team can share

Most teams treat AI like a series of casual chats rather than a scalable system. Every prompt starts from scratch, past work gets lost, and there’s no compounding intelligence. While memory and reflection help with inter-turn context, a stronger foundation comes from treating prompts and context like code: structured, reusable, and constantly improvable. This means moving from ad-hoc prompting to modular design. Think in components, not conversations, and design each prompt to stack, evolve, and transfer across use cases.

Individual prompt optimization is like everyone writing their own Excel formulas instead of sharing the good ones. Teams that build libraries of proven prompts capture institutional knowledge and let anyone access sophisticated analysis frameworks.

The most effective approach is identifying the analysis types your team does repeatedly - competitive response, campaign optimization, customer research, product launch planning - and building templates that capture how your best thinkers approach these problems. Start by having team members share prompts that have generated particularly useful insights, then work together to identify the common elements and structural patterns.

What's powerful about the template approach is that it democratizes sophisticated thinking. When your junior growth marketer needs to analyze competitive threats, they can use the same analytical framework that your senior strategist would use, complete with the organizational context and constraints that make recommendations actually implementable.

When someone discovers a better way to structure competitive analysis or finds that adding specific context dramatically improves recommendations, updating the template benefits everyone's AI interactions.
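One lightweight way to get this "update once, everyone benefits" behavior is a shared, versioned template library. The sketch below uses Python's standard `string.Template`; the `PromptLibrary` class, the `competitive_response` template, and its wording are hypothetical examples of how a team might structure this.

```python
from string import Template


class PromptLibrary:
    """Shared, versioned prompt templates; render() always uses the latest version."""

    def __init__(self) -> None:
        self._versions: dict[str, list[Template]] = {}

    def publish(self, name: str, text: str) -> int:
        """Add a new version of a template and return its 1-based version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(Template(text))
        return len(versions)

    def render(self, name: str, **fields: str) -> str:
        # substitute() raises KeyError if a placeholder is left unfilled.
        return self._versions[name][-1].substitute(**fields)


library = PromptLibrary()
library.publish(
    "competitive_response",
    "Context:\n$context\n\nCompetitor move: $move\n\n"
    "List our options, the trade-offs, and what we would need to believe for each.",
)
# Someone finds that spelling out constraints improves results, so they publish v2.
version = library.publish(
    "competitive_response",
    "Context:\n$context\n\nConstraints:\n$constraints\n\nCompetitor move: $move\n\n"
    "List our options, the trade-offs, and what we would need to believe for each.",
)
print(version)  # 2
```

Because `substitute()` raises `KeyError` when a placeholder is left unfilled, nobody can quietly send a template without the context it was designed around.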

This also creates consistency in how your team approaches problems. Rather than getting wildly different analytical approaches depending on who's doing the analysis, the templates ensure a baseline level of rigor and organizational awareness across all AI-assisted work.

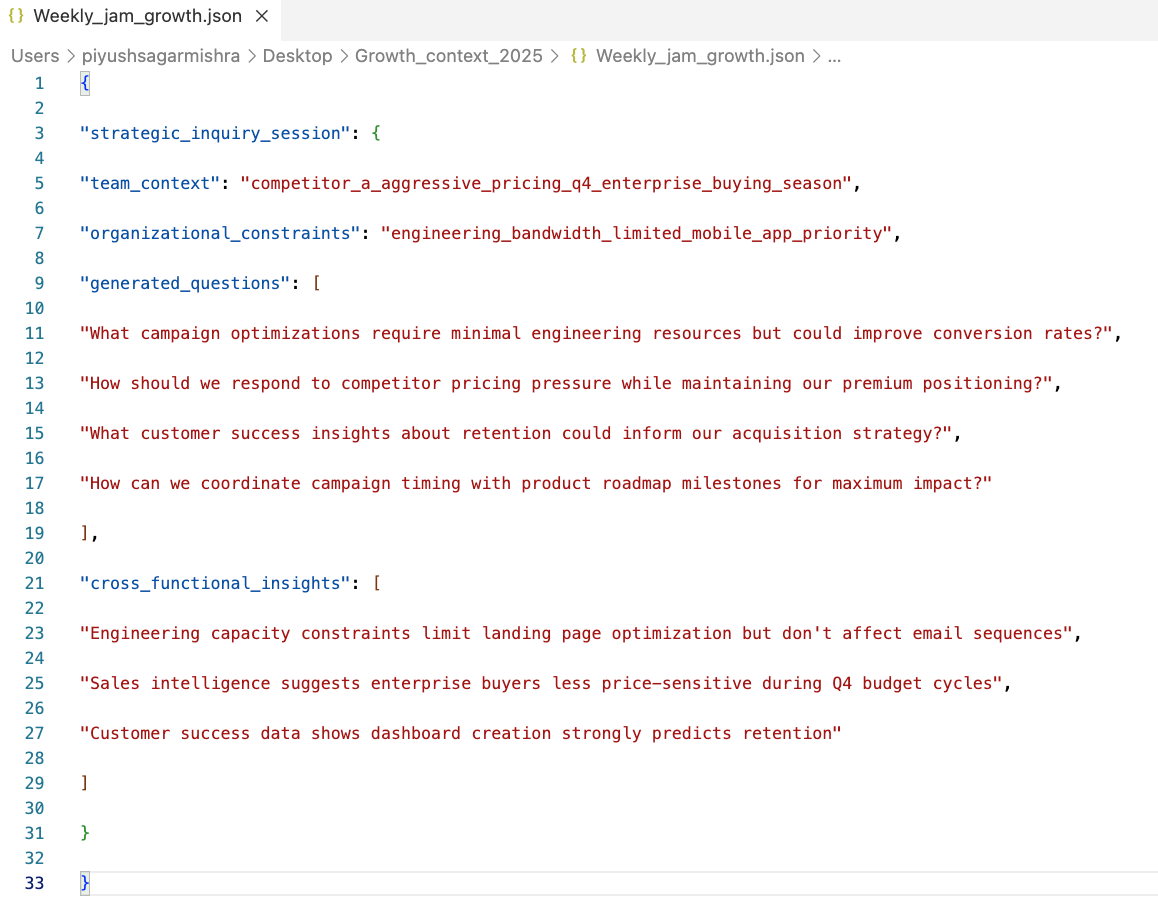

Principle 4: Start with questions, not answers: get your team to start implementing inquiry-response patterns before execution

Most teams use AI like a more sophisticated Google - asking specific questions and expecting specific answers. But the real power comes from using AI to help your team identify what questions you should be exploring together, especially questions that emerge from the intersection of different functional perspectives.

Traditional interactions follow a command-response pattern: human asks, AI answers. Advanced practitioners implement an inquiry-first approach where AI helps clarify objectives, question assumptions, and explore alternatives before providing analysis. Even in real-life teams, the best partnerships start with "What should we be asking?" and "How should we be working together?", not "Here's what I need you to do."

The shift from individual questioning to collaborative inquiry happens when teams start AI sessions with context-setting rather than specific requests. This approach surfaces blind spots and connections that individual team members might miss. When AI suggests exploring how customer success retention insights could inform acquisition targeting, it's connecting dots between functional areas that might not naturally collaborate. When it identifies the tension between engineering bandwidth constraints and optimization opportunities, it's highlighting trade-offs that need explicit team decision-making.
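The inquiry-first pattern can be built into how requests are assembled: the first call asks only for questions and assumptions, and analysis is requested only after the team has answered them. This sketch assumes the role/content message format that most chat-style LLM APIs accept; it builds the messages but makes no API call, and the prompt wording and function names are hypothetical.

```python
INQUIRY_SYSTEM_PROMPT = (
    "Before giving any recommendation, help the team frame the problem. "
    "Reply only with: (1) clarifying questions, (2) assumptions worth checking, "
    "(3) connections between functions we may be missing. "
    "Do not propose solutions yet."
)

Message = dict[str, str]


def inquiry_messages(shared_context: str, topic: str) -> list[Message]:
    """Phase 1: open with shared context and ask what we should be asking."""
    return [
        {"role": "system", "content": INQUIRY_SYSTEM_PROMPT},
        {"role": "user", "content": f"{shared_context}\n\nTopic: {topic}\n\nWhat should we be asking?"},
    ]


def analysis_messages(phase1: list[Message], model_questions: str, team_answers: str) -> list[Message]:
    """Phase 2: after the team answers the model's questions, request the analysis."""
    return phase1 + [
        {"role": "assistant", "content": model_questions},
        {"role": "user", "content": f"Our answers:\n{team_answers}\n\nNow give your analysis and recommendations."},
    ]


# Placeholder strings stand in for the model's reply and the team's discussion.
phase1 = inquiry_messages("Engineering is at 85% capacity.", "Next quarter's acquisition plan")
phase2 = analysis_messages(phase1, "<questions returned by the model>", "<the team's answers>")
```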

The most productive teams make this a regular practice: monthly strategic inquiry sessions where the team uses AI to surface questions they should be investigating together, and quarterly sessions where they explore longer-term uncertainties and opportunities. The key is treating AI as a strategic thinking partner that helps teams ask better questions, not just answer the questions they already know to ask.

Principle 5: Be great at role-based model configurations (i.e. setting persona-specific system prompts with domain constraints)

AI that adopts specific professional identities - with the mental models, priorities, and constraints of those roles - generates substantially more relevant and actionable outputs. Sander Schulhoff, the OG prompt engineer, called out the impact of role-based prompting on output quality (go to the 18th minute to hear it directly from him), and he's right that it may not make sense in a lot of scenarios. But when the role is supplied with enough context, there are plenty of instances of incredible gains in the output.

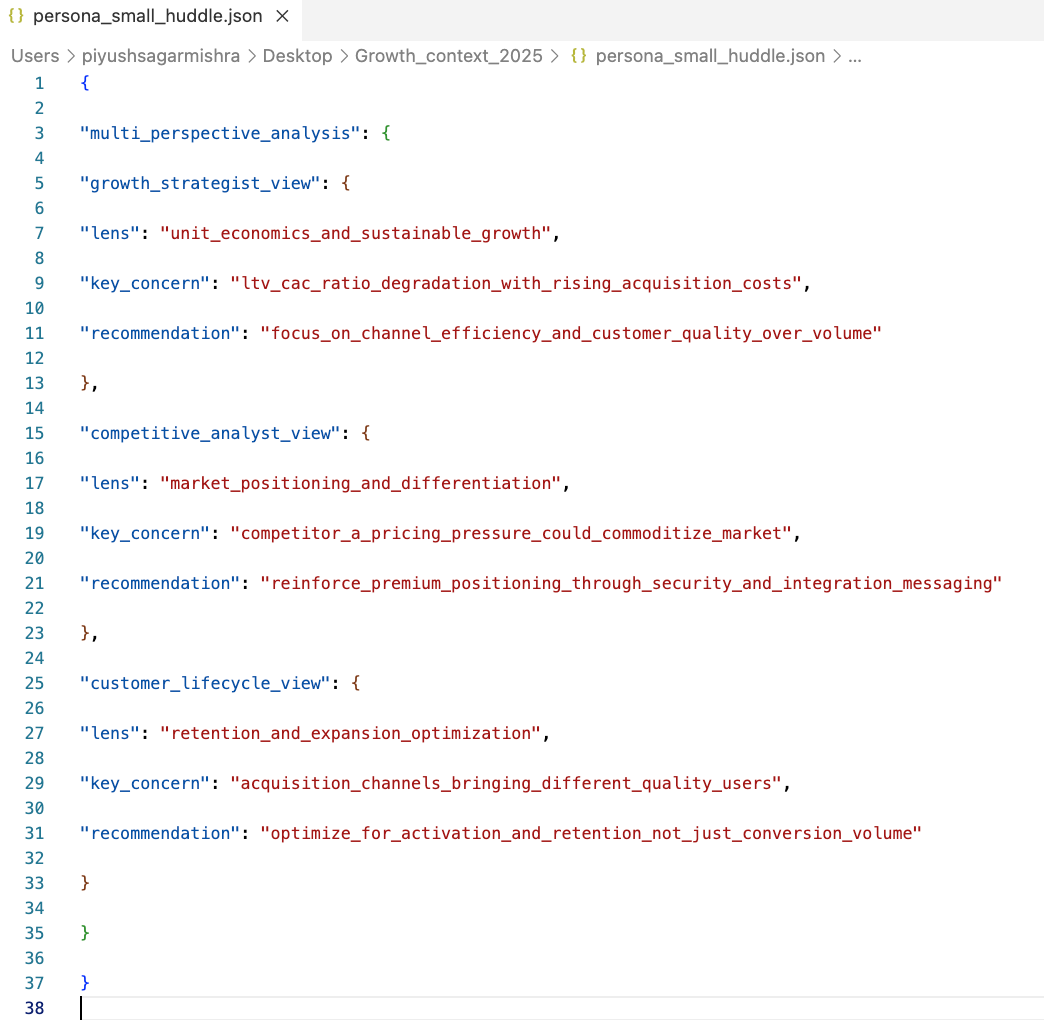

Getting different expert viewpoints on the same challenge reveals trade-offs and opportunities that single-lens analysis misses. The goal isn't to get one "right" answer, but to understand how the same situation looks through different strategic lenses.

Teams should run the same strategic challenge through multiple analytical lenses and personas simultaneously, then discuss where the perspectives align and where they create tension. Start by defining what each perspective should focus on based on your team's actual functional expertise - your growth strategist cares about unit economics, your competitive analyst focuses on market positioning, your customer success lead thinks about lifecycle optimization.
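Running one challenge through several personas mostly comes down to pairing the same user message with different system prompts. In this sketch, the three personas mirror the roles named above, while the `Persona` class, the constraint wording, and the `fan_out` helper are hypothetical; the resulting requests would be sent to whatever model your team uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    name: str
    focus: str                    # what this role optimizes for
    constraints: tuple[str, ...]  # domain rules the role must respect

    def system_prompt(self) -> str:
        rules = "\n".join(f"- {c}" for c in self.constraints)
        return (
            f"You are the team's {self.name}. Your primary lens is {self.focus}.\n"
            f"Respect these constraints:\n{rules}\n"
            "State your recommendation in one line first, then your reasoning."
        )


PERSONAS = [
    Persona("growth strategist", "unit economics",
            ("Flag any change that raises acquisition cost without a payback estimate",)),
    Persona("competitive analyst", "market positioning",
            ("Consider how competitors are likely to respond",)),
    Persona("customer success lead", "lifecycle optimization",
            ("Weigh retention and user quality, not just acquisition volume",)),
]


def fan_out(challenge: str, shared_context: str) -> dict[str, list[dict[str, str]]]:
    """Build one request per persona with the same challenge and shared context."""
    return {
        p.name: [
            {"role": "system", "content": p.system_prompt()},
            {"role": "user", "content": f"{shared_context}\n\nChallenge: {challenge}"},
        ]
        for p in PERSONAS
    }


requests = fan_out("Acquisition costs are rising on our main channel.", "Engineering is at 85% capacity.")
```

Keeping the user message identical across personas is deliberate: any difference in the answers then comes from the lens, not from the question.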

When all three perspectives agree on a recommendation, you have high confidence. When they conflict - like growth strategy saying "increase spend" while customer lifecycle says "focus on quality" - you have identified the strategic trade-offs that need explicit team decision-making. These tensions aren't problems to solve; they're the interesting strategic questions that determine your approach.
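Once each perspective has returned a short recommendation, sorting agreement from tension can be mechanical. This sketch assumes the recommendations have already been normalized to short labels (matching here is exact text after trimming and lowercasing, which would be too naive for free-form answers); `compare_perspectives` is a hypothetical helper.

```python
def compare_perspectives(recommendations: dict[str, str]) -> tuple[str, dict[str, list[str]]]:
    """Group personas by recommendation: one group is consensus, several mean a trade-off."""
    groups: dict[str, list[str]] = {}
    for persona, rec in recommendations.items():
        groups.setdefault(rec.strip().lower(), []).append(persona)
    status = "consensus" if len(groups) == 1 else "tension"
    return status, groups


status, groups = compare_perspectives({
    "growth strategist": "Increase spend",
    "competitive analyst": "increase spend",
    "customer success lead": "Focus on quality",
})
print(status)  # tension
```

The output of a "tension" result is not an answer; it is the agenda item for the team's decision-making discussion.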

The key insight is that different functional perspectives reveal different aspects of the same strategic reality. Your growth strategist sees that rising acquisition costs are pressuring unit economics, your competitive analyst sees that pricing pressure could commoditize the market, your customer lifecycle expert sees that different channels bring different quality users. All three are right - the strategic question is how to balance these realities.

Principle 6: Make AI reasoning transparent and checkable

I've previously written about this (How the hidden hurdle to scaling vibe is architecting human nose for error): the most dangerous outputs aren't obviously wrong; they're confidently wrong in ways that bypass human skepticism. LLMs can generate sophisticated analyses with fictional citations, reasonable-sounding stats from non-existent studies, and logical conclusions based on flawed assumptions. The problem gets dramatically harder in agentic workflows, where multiple AI systems make sequential decisions and errors compound rapidly without systematic verification.

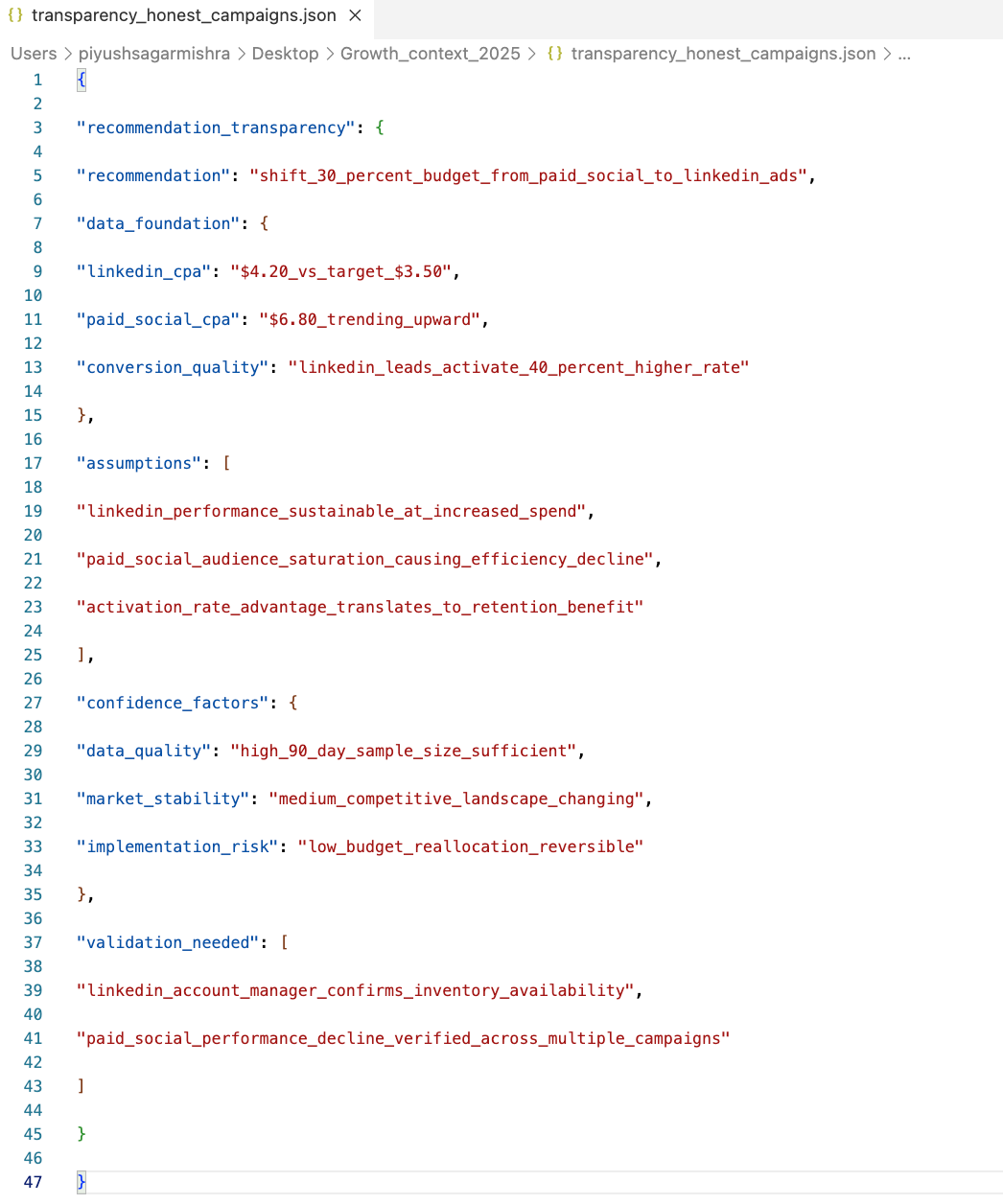

Building transparency into AI reasoning means your team can validate assumptions, check logic, and trust recommendations because they understand how conclusions were reached.

Transparency starts with teams demanding that AI show its work, not just its conclusions. When AI recommends shifting budget allocation, teams need to see the data foundation (LinkedIn CPA vs paid social CPA), understand the assumptions (that LinkedIn performance will scale), and know what validation is needed (account manager confirmation of inventory availability).
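One way to enforce "show your work" is to ask for a fixed structure and reject answers that skip the reasoning fields. The field names below are hypothetical and mirror the three elements described above (data foundation, assumptions, validation needed); the sketch assumes the model has been instructed to reply in JSON.

```python
import json

REQUIRED_FIELDS = ("recommendation", "data_foundation", "assumptions", "validation_needed")

# Instruction appended to the prompt so the model returns its reasoning, not just a verdict.
SHOW_YOUR_WORK = (
    "Reply in JSON with the keys " + ", ".join(REQUIRED_FIELDS) + ". "
    "data_foundation: the data you relied on. assumptions: what must be true. "
    "validation_needed: what a person should confirm before we act."
)


def parse_transparent_answer(raw: str) -> dict:
    """Accept an answer only if every reasoning field is present and non-empty."""
    answer = json.loads(raw)  # raises ValueError (JSONDecodeError) on non-JSON
    if not isinstance(answer, dict):
        raise ValueError("expected a JSON object")
    missing = [f for f in REQUIRED_FIELDS if not answer.get(f)]
    if missing:
        raise ValueError(f"answer is missing reasoning fields: {missing}")
    return answer


# Hypothetical model reply, mirroring the budget example above.
reply = json.dumps({
    "recommendation": "Shift budget from paid social to LinkedIn",
    "data_foundation": ["LinkedIn CPA vs paid social CPA"],
    "assumptions": ["LinkedIn performance will scale"],
    "validation_needed": ["Account manager confirms inventory availability"],
})
answer = parse_transparent_answer(reply)
```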

The real power (and what closes the build cycle) comes from having team members with domain expertise validate different aspects of the reasoning. Your paid social expert can assess whether audience saturation explains performance decline, your LinkedIn specialist can evaluate scalability assumptions, your analytics lead can confirm data quality and statistical significance. Each team member contributes their expertise to evaluating the recommendation's foundation.
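The domain review step can be tracked the same way: each claim in the AI's reasoning goes to the expert who can judge it, and the recommendation is only actionable once every claim is approved. The routing table, reviewer roles, and helper names below are hypothetical, loosely based on the example above.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical routing table: which expert checks which kind of claim.
REVIEWERS = {
    "audience saturation": "paid social expert",
    "scalability": "LinkedIn specialist",
    "data quality": "analytics lead",
}


@dataclass
class Review:
    claim: str
    topic: str
    reviewer: str
    approved: Optional[bool] = None  # None means not reviewed yet


def assign_reviews(claims: dict[str, str]) -> list[Review]:
    """claims maps each claim in the AI's reasoning to a topic in REVIEWERS."""
    return [Review(claim, topic, REVIEWERS[topic]) for claim, topic in claims.items()]


def ready_to_act(reviews: list[Review]) -> bool:
    # Act only when every expert has reviewed and approved their piece.
    return all(r.approved is True for r in reviews)


reviews = assign_reviews({
    "Paid social decline is due to audience saturation": "audience saturation",
    "LinkedIn performance will scale": "scalability",
    "The CPA comparison is statistically significant": "data quality",
})
reviews[0].approved = True
print(ready_to_act(reviews))  # False: two reviews are still pending
```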

This creates a culture where AI recommendations become starting points for informed team discussion rather than final answers. When the reasoning is transparent, teams can identify where they agree with the logic, where they have concerns, and what additional information they need to make confident decisions.

Principle 7: Learn from what works and what doesn't - Set up feedback/error handling mechanisms

The best AI outputs aren't the most confident; they're the most honest about uncertainty. False confidence is dangerous and compounds quickly in agentic systems, turning small errors into systemic failures. Smart teams distinguish between types of uncertainty: epistemic uncertainty can be reduced with more data, aleatoric uncertainty (inherent randomness) can only be managed, and ambiguity demands human judgment. Often the highest-value AI response, and the one systems should aspire to, is "I don't know, but here's how we can find out."
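A sketch of routing on uncertainty type, assuming the model has been asked to tag each claim as `epistemic`, `aleatoric`, `ambiguity`, or `none`. The labels, the next-step wording, and the `route` helper are illustrative assumptions, not a standard taxonomy API.

```python
from enum import Enum


class Uncertainty(Enum):
    EPISTEMIC = "epistemic"  # reducible: missing data we could go and collect
    ALEATORIC = "aleatoric"  # irreducible: inherent randomness in the outcome
    AMBIGUITY = "ambiguity"  # the question or goal itself is underspecified


NEXT_STEP = {
    Uncertainty.EPISTEMIC: "Gather more data before deciding",
    Uncertainty.ALEATORIC: "Decide, but plan for a range of outcomes",
    Uncertainty.AMBIGUITY: "Escalate to a person to clarify intent",
}


def route(claim: str, label: str) -> str:
    """Map the model's uncertainty label for a claim to the team's next step."""
    if label == "none":
        return f"Proceed: {claim}"
    kind = Uncertainty(label)  # raises ValueError for an unrecognized label
    return f"{NEXT_STEP[kind]}: {claim}"


print(route("LinkedIn performance will scale", "epistemic"))
# Gather more data before deciding: LinkedIn performance will scale
```

Rejecting unknown labels with an error, rather than defaulting to "proceed", keeps a malformed answer from slipping through as false confidence.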

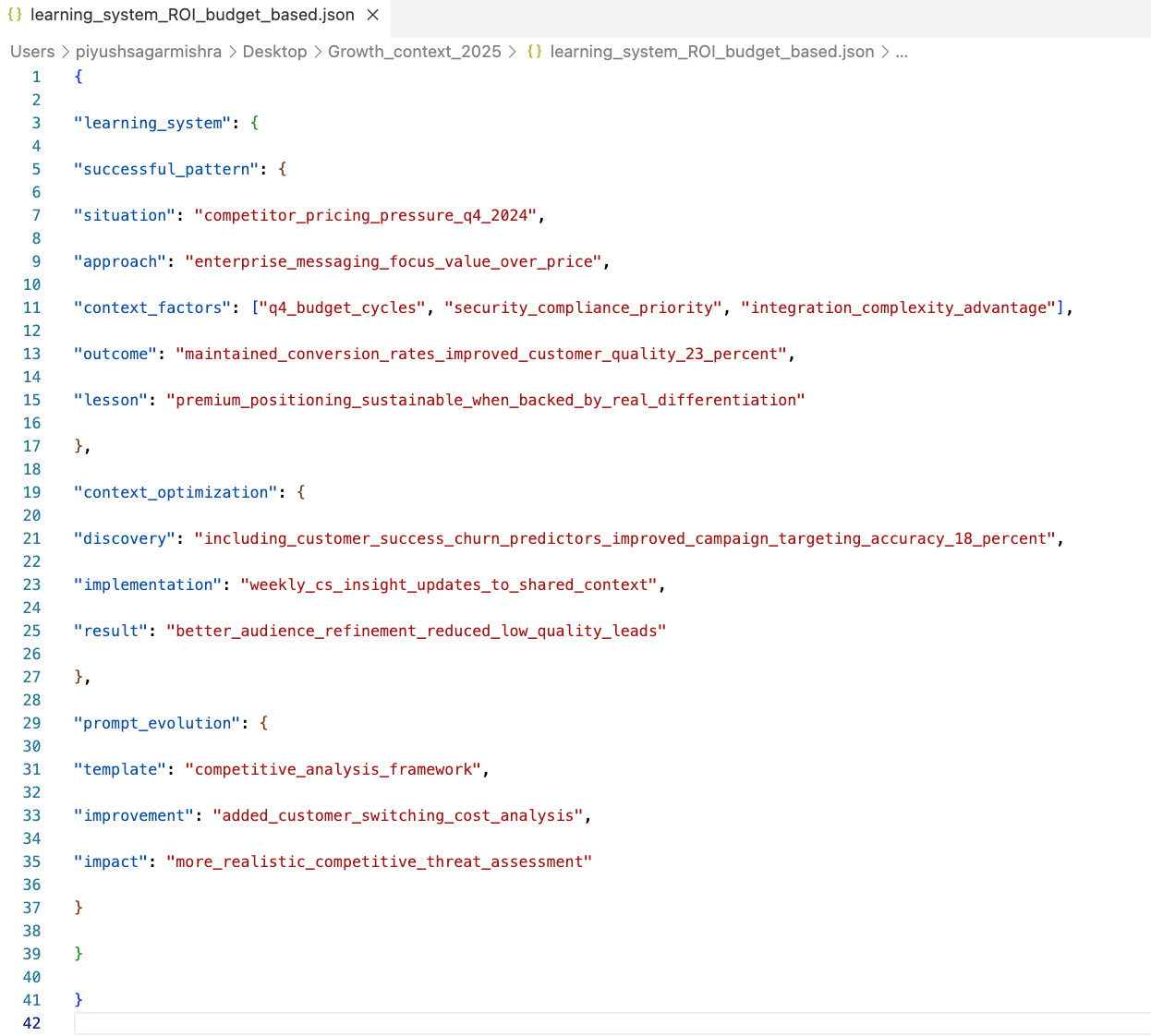

By systematically tracking what works, what doesn't, and why, teams build institutional memory that compounds over time.

Learning happens when teams systematically track the outcomes of AI-assisted decisions and extract patterns from what works. Start by documenting significant recommendations and their results - not just whether they succeeded or failed, but what contextual factors contributed to the outcome.
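A decision log does not need special tooling. This minimal sketch records each AI-assisted decision with the contextual factors present at the time and computes a per-factor success rate. The `Decision` fields, factor names, and log entries are hypothetical, and with small samples these rates describe what happened rather than prove what caused it.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Decision:
    recommendation: str
    succeeded: bool
    context_factors: frozenset[str]  # what was true when the decision was made


def success_rate_by_factor(log: list[Decision]) -> dict[str, float]:
    """Share of decisions that succeeded, for each contextual factor that was present."""
    totals: dict[str, int] = defaultdict(int)
    wins: dict[str, int] = defaultdict(int)
    for d in log:
        for factor in d.context_factors:
            totals[factor] += 1
            wins[factor] += int(d.succeeded)
    return {f: wins[f] / totals[f] for f in totals}


# Hypothetical entries, for illustration only.
log = [
    Decision("Retarget lapsed trials", True, frozenset({"churn_predictors_included"})),
    Decision("Broaden lookalike audience", False, frozenset()),
    Decision("Target high-intent segment", True, frozenset({"churn_predictors_included", "q4"})),
    Decision("Run a discount push", False, frozenset({"q4"})),
]
rates = success_rate_by_factor(log)
print(rates["churn_predictors_included"])  # 1.0
```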

The goal is building institutional memory that survives team changes and compounds organizational intelligence. When your team discovers that including customer success insights about churn predictors improves campaign targeting accuracy by 18%, that learning should benefit all future AI interactions. When a competitive response strategy works particularly well, the specific approach and context should become part of your team's strategic playbook.

This creates a feedback loop where your team's AI capabilities improve over time. Context gets richer as you learn what organizational intelligence is most valuable. Prompts and contexts get more sophisticated as you understand what analytical frameworks produce the best results. Decision-making gets better as you accumulate patterns about what works in specific contexts.

The key is making this learning systematic rather than ad hoc. Monthly retrospectives on AI-assisted decisions, quarterly reviews of what context and prompts are performing best, annual assessments of how your team's AI capabilities have evolved. Teams that invest in systematic learning build AI capabilities that compound and become increasingly difficult for competitors to replicate.

Building systematic team AI capabilities

The progression from individual AI use to sophisticated team capabilities happens when teams build shared infrastructure: common goals, centralized context, reusable prompts, collaborative inquiry, multi-perspective analysis, transparent reasoning, and systematic learning.

The JSON examples throughout these principles aren't meant to be intimidating or to represent best-in-class practice; they're just illustrations of how to structure information so AI can use it effectively and teams can maintain it over time. Most teams can start with simple shared documents that capture the essential elements, then evolve toward more sophisticated systems as their capabilities develop.

The key insight is that effective team AI isn't about having the smartest individual prompts or the most advanced technical setup. It's about building systematic approaches to capturing and leveraging collective intelligence. If you're just starting, don't chase complexity; focus on mastering context engineering, meaning how to write, select, compress, and isolate context (good starter kits and intros here from LangChain, and here from DAIR.ai). These skills are the scaffolding for everything that follows. Teams that get this right early will build AI capabilities that compound over time and become increasingly hard to copy.
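To make two of these terms concrete, here is a toy sketch of select (keep only context relevant to the task, here by tag overlap) followed by a crude stand-in for compress (drop lower-priority snippets to fit a character budget, where real compression would summarize them instead). The `Snippet` type, tags, and budget are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    text: str
    tags: frozenset[str]


def select_and_compress(snippets: list[Snippet], task_tags: set[str], budget_chars: int) -> str:
    """Select snippets that share tags with the task (most overlap first),
    then skip any snippet that would push the total past the budget.
    The budget counts snippet text only, not the joining newlines."""
    relevant = [s for s in snippets if s.tags & task_tags]
    relevant.sort(key=lambda s: len(s.tags & task_tags), reverse=True)
    chosen: list[str] = []
    used = 0
    for s in relevant:
        if used + len(s.text) > budget_chars:
            continue
        chosen.append(s.text)
        used += len(s.text)
    return "\n".join(chosen)


snippets = [
    Snippet("Engineering is at 85% capacity.", frozenset({"engineering", "capacity"})),
    Snippet("Competitor A is applying price pressure.", frozenset({"pricing", "competitive"})),
    Snippet("Office move planned for spring.", frozenset({"facilities"})),
]
ctx = select_and_compress(snippets, {"pricing", "competitive", "capacity"}, budget_chars=80)
print(ctx)
```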

Further reading:

https://blog.langchain.com/how-and-when-to-build-multi-agent-systems/

https://www.anthropic.com/engineering/built-multi-agent-research-system

https://github.blog/ai-and-ml/llms/the-architecture-of-todays-llm-applications/

https://www.martinfowler.com/articles/engineering-practices-llm.html

https://www.llamaindex.ai/blog/context-engineering-what-it-is-and-techniques-to-consider

https://www.innoq.com/en/blog/2025/07/context-engineering-powering-next-generation-ai-agents/