Large scale a/b testing, quirks of data people and bots

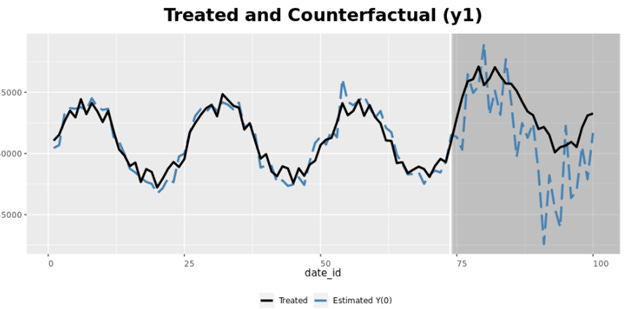

Leveraging conterfactuals to drive roadmaps when you can’t do a/b testing

A Day in the Life of a Content Analytics Engineer: Part of Netflixs’s blog on their analytics team and roles

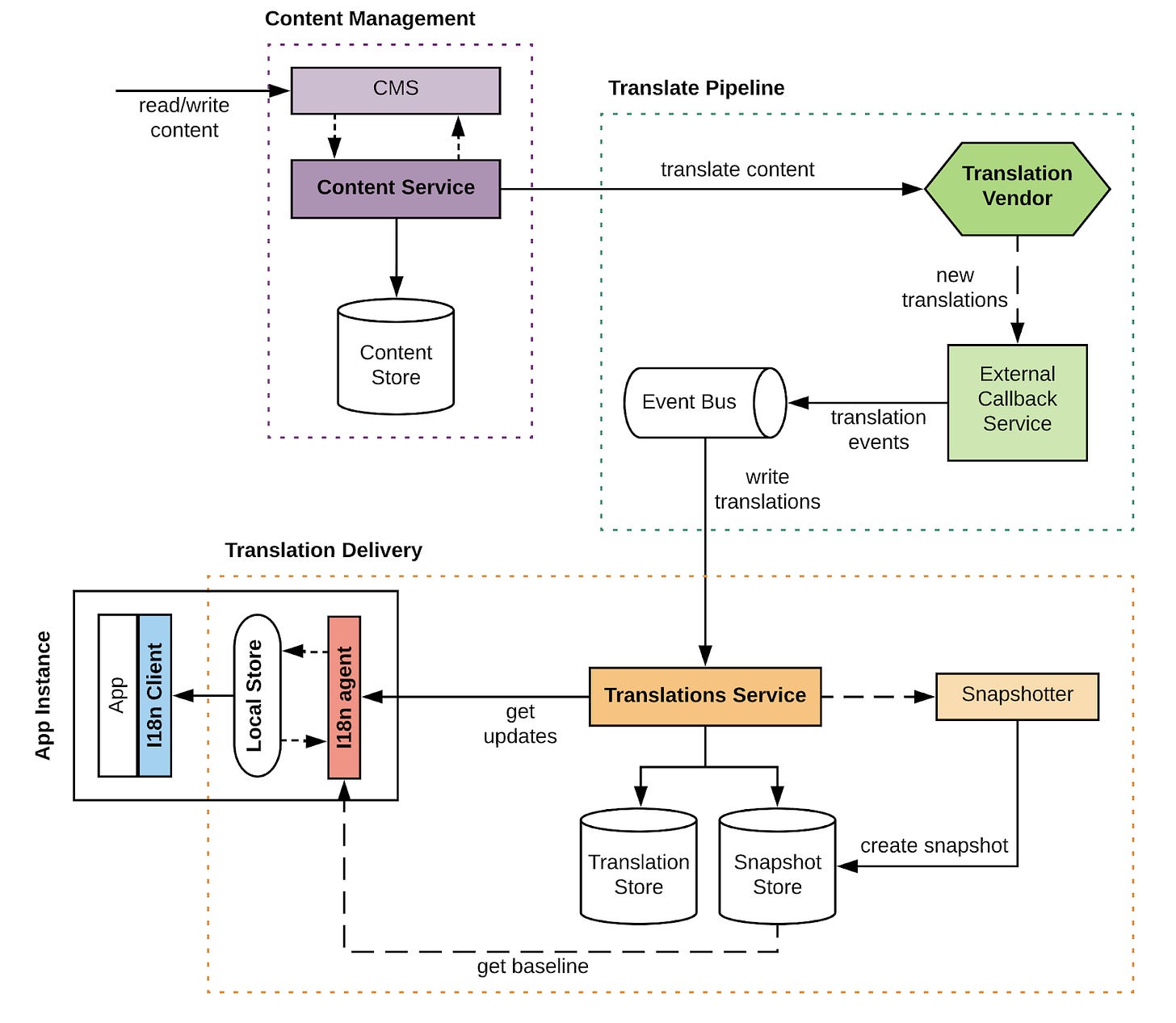

Internationalization is the process of adapting software to accommodate for different languages, cultures, and regions (i.e. locales), while minimizing additional engineering changes required for localization. This article highlights how Airbnb’ built its Internationalization (I18n) Platform in support of that vision, by serving content and their translations across product lines to our global community in an efficient, robust, and scalable manner.

In “Data Valuation Using Deep Reinforcement Learning”, accepted at ICML 2020, Google addresses the challenge of quantifying the value of training data using a novel approach based on meta-learning. Their method integrates data valuation into the training procedure of a predictor model that learns to recognize samples that are more valuable for the given task, improving both predictor and data valuation performance.

How should your company structure the data team: Real-life learnings from five data team iterations: centralized, embedded, full-stack, pods and business domains.

GDMix (Generalized Deep Mixed model) is a solution created at LinkedIn to train high cardinality personalised ranking engines efficiently. It breaks down a large model into a global model (a.k.a. “fixed effect”) and a large number of small models (a.k.a. “random effects”), then solves them individually. This divide-and-conquer approach allows for efficient training of large personalization models with commodity hardware.

Github link here

A data engineer is interested in seeing how his latest experiment influenced latency. A product manager wants to see country-based usage trends over the past quarter. A production engineer wants to list task crashes grouped by the owning team. Each of these people knows that the information is available somewhere, but the knowledge of where to look is often limited to a small number of senior employees.

In this article Facebook explains about its data discovery platform, Nemo, that is built upon Unicorn, the same search infrastructure used for the social graph.

Bonus read: How airbnb’s data discovery stack works

Creating a standard for benchmarking probabilistic programming languages: PPL Bench is an open source benchmark framework for evaluating probabilistic programming languages (PPLs) used for statistical modeling. Researchers can use PPL Bench to build their own reference implementations (a number of PPLs are already included) and to benchmark them all in an apples-to-apples comparison. It’s designed to provide researchers with a standard for evaluating improvements in PPLs and to help researchers and engineers choose the best PPL for their applications.

How to make a chatbot that isn’t racist or sexist : Tools like GPT-3 are stunningly good, but they feed on the cesspits of the internet. How can we make them safe for the public to actually use?

Integration is by far the most important criteria when selecting martech — and it’s changing the industry

Google recently announced ways to blur and replace your background in Google Meet, which uses ML to better highlight participants regardless of their surroundings. Whereas other solutions require installing additional software, Meet’s features are powered by cutting-edge web ML technologies built with Media Pipe that work directly in your browser — no extra steps necessary.

Other related references here (blur), here (replace) and here (media pipe)

A University of Kansas interdisciplinary team led by relationship psychologist Omri Gillath has published a new paper in the journal Computers in Human Behavior showing people's trust in artificial intelligence (AI) is tied to their relationship or attachment style.

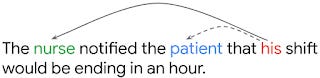

Here’s a gist of Google’s case study on BERT and its low-memory counterpart ALBERT, looking at correlations related to gender, where they formulate a series of best practices for using pre-trained language models. They present experimental results over public model checkpoints and an academic task dataset to illustrate how the best practices apply, providing a foundation for exploring settings beyond the scope of the listed case study.

Google indicated that it will soon release a series of checkpoints, Zari1, which will reduce gendered correlations while maintaining state-of-the-art accuracy on standard NLP task metrics.

Let's stop fooling ourselves. What we call CI/CD is actually only CI.

To see what makes AI hard to use, ask it to write a pop song