tuning and turing, fake ethics and attention modeling

Creating this newsletter takes hours of effort each week. Forwarding it to someone who’d find it useful takes 30 seconds.

❤️ Support this project! Share this with 3 friends.

🚀 Got this forwarded from a friend? Sign up here

OpenAI: mess or not? The AI moonshot was founded in the spirit of transparency. This is the inside story of how competitive pressure eroded that idealism.

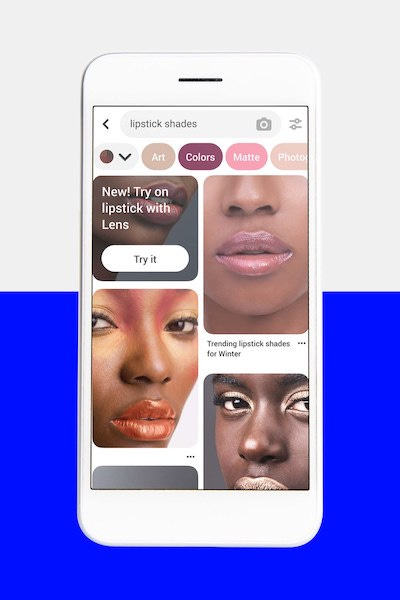

Pinterest steps up e-commerce efforts with AR try-on

5 fantastic data engineering projects

What the heck does attention even mean in natural language processing?

Get COVID-19 queries answered through Haptik’s new (free) chatbot

How FiveThirtyEight fixed an issue with its primary forecasting engine for candidates’ demographic strengths

Google launched Colab Pro, a $10/month subscription that gives you cloud Jupyter notebooks with faster GPUs and TPUs, longer runtimes, and more memory.

Where and how long should you turn the knob to get hot water in the shower?

AI fooling AI: hard fool or foolhardy?

When do (can) predictions become policies?

Kickass datasets and other resources for all things NLP

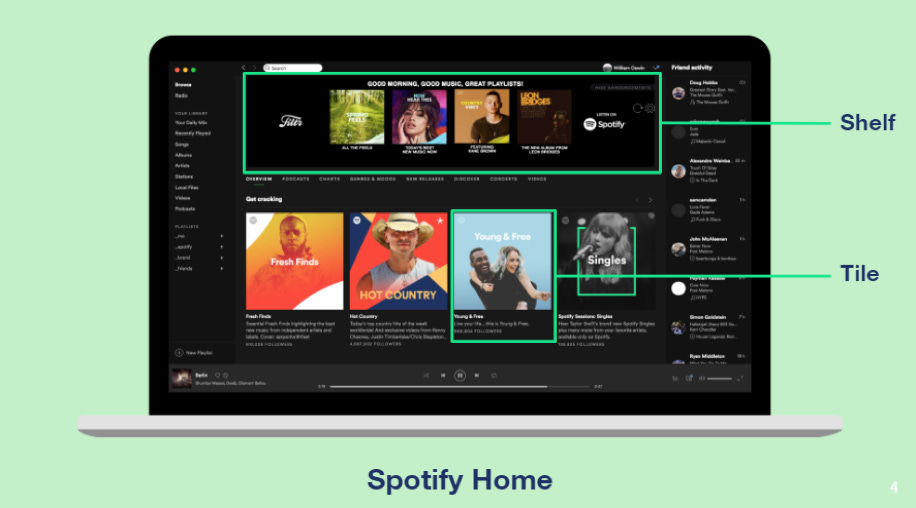

Personalising Spotify Home using machine learning

Will hand-labelling data become passé?

Driving stakeholder engagement in enterprises using an interactive hypothesis canvas

Turing-NLG: A 17-billion-parameter language model by Microsoft is here

Another drill down into hyperparameter tuning

Do we need more data or more science in data science?

Airflow vs. Luigi, or Airflow and Luigi?

Advanced analytics platforms: leaders, laggards and MQ 2020 trends

Making experimentation real: an A/B test for A/B tests